No, I'm not going to rail on about Darfur again. Google “cultural genocide” and scroll down through five pages of outcries against genocide real (Armenia, Tibet) and imagined (post-slavery white Southern society, lesbian families), and you’ll run into an interesting blip. About ten years ago, the Deaf community in America accused scientists of cultural genocide for encouraging cochlear implants in deaf children as young as six months. The Deaf are not known for mincing words, and their choice of the term “genocide” may have seemed hyperbolic at the time, but their approach has apparently worked. Though children are being “implanted” (yikes) regularly now, this threat to Deaf culture and language appears to have been subverted and conquered.

Between ninety and ninety-five percent of children born deaf are born to hearing parents. The other five to ten percent are born to deaf parents, and therefore are usually raised with sign language and enveloped in Deaf culture and community from an early age. Those born into the hearing world have traditionally taken a different path, one that usually goes something like this: an infancy of gazing at your parents' moving lips and furrowed brows, an early childhood of visits to audiologists and frequent fittings and refittings for hearing aids, followed by admission into a deaf school, the discovery of a sign language and a whole bunch of people who use it, and then a life divided between love of home and family and the irresistible attraction of a community that signs. This is a gross simplification, but one I doubt many deaf people would deny is basically true.

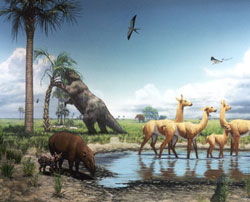

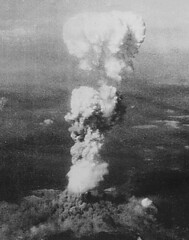

This won't hurt a bit ...

And here lies the landmine of the cochlear implant. Suppose we take all those deaf children born to frantic, hearing parents and remove them from that path, implant a device in their heads (at as young as six months) to make them hear, and tell the parents to keep them away from sign language. Disregarding for a moment the fate of those children, what happens to the community? Within a single generation, perhaps two, the entire community of the deaf would be decimated, putting at risk its language, its culture, and a good deal of its history. A few generations later, sign language would be about as significant as Esperanto, and the culture, history, and humor of the Deaf would be utterly lost, there being nothing left of deaf people but “Children of a Lesser God” and some interesting documentaries from the early 21st century. Okay, perhaps “genocide” is not so hyperbolic.

This situation lends itself to all kinds of analogies, most of which are misleading. The most common comparison thrown out by audiologists and hearing parents of deaf children is, “If the child were born without a leg, we’d give him a prosthesis, right?” Yes, of course. But drawing connections between physical handicaps and deafness muddles the reality of what it is to be deaf. Many deaf people reject being called "handicapped." The PC relativist phrase "differently abled" generally makes me gag, but when the deaf use it, they have a point. Consider this. Imagine a group of blind people suddenly called into existence in central Africa a few thousand years ago. No offense intended, but they’d be lion chow in a matter of weeks, no matter how great their ingenuity. Imagine a community of paraplegics. Probably the same outcome. Imagine a community of autistics--same thing. But a community of deaf people suffering the same miserable circumstances would have very likely survived, prevailed, and ultimately prospered just as hearing communities did. (In fact, the earliest forms of sign language may have been developed by early African hunters trying their best in the brush to make the lions dinner instead of fatter.) Hearing doesn’t do humans much good against predators. If you hear the lion coming, you’re doomed already.

In terms of "ability," hearing served us primarily by providing an effective means of communication, and the deaf found an alternate means, one comparably effective before the invention of the telephone (which the deaf would not have invented prior to the television). It's interesting that the inventor of the telephone, Alexander Graham Bell, was an ardent advocate of eliminating deaf society through sterilization and forced assimilation. Maybe if he'd invented a videophone he would've been a little more open-minded. There's little in humankind's list of achievements today that could not have been achieved in the absence of sound. Music doesn't count--sorry, Amadeus, it's a trifle compared to the ability to organize our lives and rule the planet. Besides, many deaf people enjoy music, particularly a good back beat. (I once knew a Deaf high school student who needed constant reminding to remove his headphones in class.) If they'd had to invent music, however, it probably would have been a little wanting, like if all the world's cuisine had been left to the British.

Bringing this back to the issue of cochlear implants, I must say that if my five-year-old daughter were tomorrow diagnosed with severe hearing loss, I'd probably scratch together the forty grand and get her the operation. But she's five, and already speaking one language and learning another, and attending a hearing school and acclimating to hearing culture. It's easy to say now, but I do believe I would not have had her "implanted" had she been born deaf. Lack of hearing at birth is not, I believe, a handicap like blindness or missing limbs. It is more akin to--and this is an odd analogy the Deaf community thought up at the height of the implant furor--being born black in a predominantly white society. Not the best of luck, but certainly no reason to go messing with nature.

The most common form of deafness is caused by deformation or absence of hair cells inside the cochlea, the watery, shell-shaped part of your inner ear. These hair cells serve as the conduit that normally translates motion inside your ear into electrical signals that are then carried by your auditory nerve to your brain, where they are translated into what you perceive as speech, wind in the trees, or Brahms. If your cochlear hair cells are not up to the job, a cochlear implant will replace them with a set of electrodes. Installing this set of electrodes requires drilling a hole in your skull. Making them work requires wearing a magnetic transducer connected by a wire to a microphone whose signals are processed through a computer to simplify them into something your brain can comprehend. Most CI users wear this kit on a belt, though newer technology fits it into a hearing-aid-sized bit you can wear over your ear. It’s proven pretty effective at speech for many users, though the wind in the trees and Brahms are still a ways off. One out of three ain’t bad, though you might wish for either of the other two once you've had your fill of what passes for speech these days.

I worked for several years at a school for the deaf in New York City. Astonishingly, I was hired despite the fact that I’d been signing for less than a year. Whether my skills were even passable at that stage is irrelevant, since no one bothered to test me before putting me in the classroom. I learned quickly, thanks to the facts that I was married to a native signer--a hearing child of deaf parents--and that my Deaf teaching assistant refused to speak or to pretend to read lips. I still feel guilty about the students who suffered through the ineptitude of my first year, when I was learning to sign as they were attempting to learn writing and history. My second-year students fared better, and today I am nearly fluent.

At the time I worked at the school, the deaf world was experiencing an upheaval over the issue of “oralism” versus signing in the classroom. Oralism refers to an educational philosophy espousing the exclusion of sign language from deaf classrooms. Adopted by American educators of the deaf in the 19th century, oralism holds that teaching the deaf to speak, read lips, and use assistive listening technology is the best route to integrating them successfully into hearing society. Problems arose as deaf students realized that clear speech was not possible for some among them, that lip-reading is largely a myth, and that hearing aids are good when the kettle is boiling but not much use at a boisterous dinner table. What the deaf also knew is that they possessed, as we all do, an innate mental flexibility--cerebral plasticity--the ability to adapt. Without the ability to hear, the deaf possess a means of communication equal to that of the hearing world, though one at a disadvantage in societies based on verbal expression and auditory reception. (There are instances, in fact, when deaf communication exceeds the efficiency of hearing communication. I can describe the physical layout of a room to my Deaf stepson in less than half the time it would take me to describe it to his hearing sister, and with greater accuracy and comprehension. In my experience, subtleties of emotion are also more effectively expressed in sign than verbally.) Anyway, oralism lost. The school where I worked had been a bastion of oralism, and not long after I left, they were compelled by student protests to institute a sign-language requirement for incoming teachers that would have--just five years earlier--prevented my being hired. A great leap forward in a short span of time.

Compared to bats (which can guide their motion using sonar), snakes (which rely on their ability to see in infrared), and birds and fish (which depend upon a sense of magnetism we cannot mimic even with a compass), we are all disabled. Many deaf people consider themselves as “abled” as they want to be. They do not think of their ears as vestigial appendages handy for jewelry or holding up sunglasses, but they regard the auditory world the way most of us regard the olfactory world: sometimes useful, often amusing, but by no means vital. (There are certainly also many deaf who disagree. In my family we have two deaf men, one who would give almost anything to hear and the other who wouldn’t become hearing if you paid him.) I understand how difficult hearing people find it to fathom this attitude. The fastest education any hearing person can receive is to seek out the local place where Deaf people gather (there is always one) and visit it on the right night. The communication you will witness will be more alive than you have ever seen in a room filled with hearing, speaking people. You will feel why so many Deaf fear abandoning the quiet--yet vibrant--world they’ve come to know.

A few points about deafness:

1. You've probably noticed that I capitalize the word "deaf" occasionally. I do this when referring to a person or group that is culturally deaf. Someone who is simply physiologically deaf gets a lowercase "d." My teaching assistant was one of at least eight brothers and sisters born deaf to deaf parents who themselves had deaf parents. He is Deaf. (In fact, for being from a multi-generational deaf/Deaf family, he gets the much-envied title of "strong Deaf.") If my grandfather finds that even with hearing aids he cannot hear anymore, then he is deaf, not Deaf.

2. Deaf does not mean mute. Many deaf people use their voices all the time, and not just in the company of hearing people. Visit any school for the deaf at lunchtime and you’ll see what I mean.

3. Deaf does not mean mentally retarded or deficient in any way. It’s embarrassing to even have to address this slander, but it doesn’t seem to want to go away. I won’t treat you to a list of all the achievements of deaf people throughout history ... you can Google that. I’d rather point out that this misperception arises out of the obvious fact that deaf people have a hard time understanding what you’re saying--and it doesn’t mean they’re stupid. They can’t hear you. If you found yourself lost on the campus of a deaf school, you might have a hard time understanding the directions you’d get. But that doesn’t make you retarded, does it?

And addressing some common misconceptions about sign language:

1. Sign language is not universal, and there is no reason it should be. If you don’t speak the same language as someone living in Kyoto, why should your deaf friend sign the same language as a deaf person in Kyoto? Signers of different languages do seem to have an easier time understanding one another than hearing speakers of different languages. This may be partly due to the iconic nature of many signs and the fact that signers are accustomed to discerning meaning in gesture. It may also have to do with the fact that most sign languages have certain givens, such as expressing past, present, and future spatially (past is usually either behind--as in ASL--or to the signer’s left--as in British Sign Language). But grammar, syntax, and vocabulary vary so much among deaf signers around the world that we have arguably as many sign languages on earth as we have spoken languages. American Sign Language, or ASL, is merely one of humankind’s many sign languages, though it is probably the best understood of them and is undoubtedly the one that counts the most hearing people among its users. By some estimates, over two million people in America use ASL as their primary mode of communication, which would make it the nation’s third most popular language.

2. Sign languages were not invented by hearing people who then bestowed this brilliant gift upon a grateful and previously bored deaf population. Sign has existed for millennia and evolved organically over time and across distances, just as spoken languages did. The signed alphabets were invented and bestowed, however, and they serve as a necessary bridge between sign languages (none of which have any written form) and the languages the rest of us use.

3. When a signer “fingerspells” he or she is spelling a word that has been adopted from spoken language but does not have its own sign. If I want to tell my deaf dinner guests that I’m serving ocelot steak, I need to spell “O-C-E-L-O-T,” because there is no sign signifying that exact animal. (There are signs for “lion,” “tiger,” and so on, and deaf people in Colombia probably have a sign for “ocelot,” but few in North America would know it.) I can sign--not fingerspell--“steak” simply by pinching the flesh between my thumb and forefinger. Many signs are vaguely or overtly iconic. Sign languages are sometimes denigrated as not being real languages because of the iconic nature of some signs. Critics say it’s more gesture and mime than language. The next time a waiter asks what you’d like for dinner, answer by pinching the flesh between your thumb and forefinger. See how iconic sign language is.

4. American Sign Language is not merely English codified into hand and finger movements. That’s called Signed Exact English, and it’s used by many educators of the deaf in a misguided attempt to aid children’s English language comprehension. Very few deaf adults use SEE; it’s cumbersome and inelegant. ASL is fluid and natural by comparison; it has its own grammar. For this reason, it’s impossible to sign ASL and speak English simultaneously without sacrificing the accuracy of one or the other.

5. Braille has nothing to do with the deaf. (This may seem obvious, but you’d be amazed how many people ask me about Braille when they hear I worked as a teacher of the deaf.) Braille is simply the spoken English language expressed in our 26-letter phonetic alphabet and codified into raised dots. There are no widely used written forms of sign language, owing mainly to the fact that most sign languages are non-linear in nature, conveying meaning through an active tableau of simultaneous hand-shapes, movements, and facial expressions. This level of visual complexity does not translate well onto paper. My class once worked for months on developing a written form of American Sign Language, a project that captured their attention like no other. They invented a set of swoops, curves, and loops--all punctuated with dots and accents showing handshapes and eyebrow position--and found they could cut written English out of the middle and communicate simple sentences directly to one another on paper in the language of their minds. More serious Deaf linguists, teachers, and students have since brought the dream of written sign closer to reality.

So why did the Deaf community freak out over cochlear implants? And why has the shouting died down now?

The early approach to language development in children with cochlear implants mimicked the old oralist approach: avoid sign language as it might inhibit the development of the child’s verbal communication skills. Over the past few years, that approach has lost its primacy, and acceptance of sign language as an option or even a necessity in the lives of “implanted” children has taken some of the steam out of the “implants are genocide” argument. In an article published in 2004 on Audiology Online, Debra Nussbaum addressed the changing attitudes and pointed correctly to their cause: failure of implants to live up to expectations in a significant number of children:

During the initial era of cochlear implantation in children during the late 1980s and early 1990s, the number of students with cochlear implants was relatively small, and children with implants comprised a fairly homogenous group. Planning for that population was perhaps easier and more well defined. It was assumed that a decision to obtain a cochlear implant involved participation of an educational setting that exclusively utilized an auditory means of communication. The expectation was that all students with cochlear implants would have full access to spoken language.

As we have had the opportunity to take a closer look at the characteristics of students with cochlear implants for almost two decades now, it has become increasingly apparent that there must be more than one definition of an effective program for children with cochlear implants ...

In the fall of 2000, the Laurent Clerc National Deaf Education Center at Gallaudet University in Washington, D.C., established a Cochlear Implant Education Center (CIEC) ... At the time the CIEC was established, sign language for students with cochlear implants was rarely promoted, as sign language was viewed by many medical, audiology, speech-language pathology and education professionals as deterrent to spoken language development. While that opinion continues to be held by some, feedback from families and professionals across the country, and some early research, suggests that there is increasing support for use of sign language for a segment of implanted children. Those continuing to advise against the use of manual communication for children with cochlear implants warn that the use of sign language reduces the amount and consistency of spoken language stimulation for a child, promoting dependency on visual communication, and causing further delay in spoken language acquisition. Those maintaining that sign language can be beneficial to children with cochlear implants believe that with careful attention and planning, spoken language development can be maximized in a signing environment, and that sign language use can support the development of spoken language.

This relatively rapid acceptance by audiologists of the utility of sign language comes as a bit of a surprise to me. The scientific and support community behind assistive listening devices and cochlear implants had a reputation for being a bit hard-headed on the issue of sign language. Perhaps the discrediting and demise of oralism that took place through the 1990s made the somewhat similar approach of early CI advocates seem untenable. It’s also probable that the community could not continue to shrug off as anomalies the number of early-implanted children who ended up severely language delayed.

In a rebuttal to a statement from the Alexander Graham Bell Center on the preference of speech as the language modality for implanted children, Nancy Bloch of the National Association of the Deaf writes:

The NAD takes a more holistic approach with its emphasis on early exposure to and usage of sign language as a vital component of the rehabilitation and support services program for implanted deaf children and their families. It is incumbent upon such centers to afford implanted children with all the tools available that can contribute to lifelong success. The reality is that childhood implantation continues to have variable outcomes. For some, it works better than for others. Either way, empirical research has shown that children who maximize use of signing at an early age score higher at a later age on reading and mathematics tests. We have long known the data on the accomplishments of deaf children of deaf parents whose abilities are comparable to and better than their hearing peers. Such children are both fluent in English and sign language. This points to the very real benefits of early signing, given appropriate support for overall language development.

This kind of levelheaded, reasoned approach has proven far more effective than accusations of genocide in bringing the best interests of implanted children back to the top of the agenda. (The hyperbolic controversy of the 1990s should not be dismissed as useless, however, as it probably served to bring visibility to the issue, visibility that made it impossible for the audiology establishment to ignore people like Nancy Bloch.) The new paradigm born of the compromise resembles an earlier approach to language development in deaf children called “BiBi.” The University of North Carolina at Greensboro’s “CENTeR” for early intervention with deaf and hard-of-hearing children has on its website a succinct description of six common approaches to language development, including BiBi.

Bilingual/Bicultural (BiBi) emphasizes American Sign Language as the infant's primary language through immersion. Thus, ASL becomes the basis for learning English as a second language. Connections are established between ASL and written English. ASL provides access to the culture of the Deaf community. Individual decision-making about amplification and speech are encouraged.

This approach clearly places more emphasis on sign than most implanted children probably experience, at least until they have failed in a strictly oral environment. It does acknowledge the reality to which Bloch alludes in her rebuttal regarding deaf children raised in signing environments: they tend to master English (though not necessarily speech) better than those denied sign at an early age, and they tend to perform better academically and in hearing society. I witnessed this effect first-hand in my time teaching the deaf. I was assigned to work with “low-functioning” high-school students, and to the best of my recollection, not one of my students was a child of deaf parents and most of the parents I saw on conference nights did not even sign. The students whose parents were deaf or whose parents had adopted sign effectively into their households were almost without exception “high functioning,” and they went on to succeed academically on the college level both in programs for the deaf and in regular hearing colleges and universities. A Google search on one such student whose name I recall reveals that he graduated from Boston University with a degree in political science and now teaches ASL at Harvard. Not too shabby. Anecdotal, of course, but worth thinking about.

So on the face of it, it looks as if the cochlear-implant controversy has faded away for good reason: implanted children are no longer blindly steered away from signing, and many adolescents and young adults with implants are choosing what is best for them, even if that means attending deaf schools or attempting belatedly to join Deaf culture. Isn’t it nice when things work out? But there’s a slight problem. (If there weren’t, why would I be writing this?) The controversy has not really been resolved; it has merely been postponed. Science will eventually come up with a more effective “cure” for deafness, one that actually repairs the existing biological structures rather than replacing them with technological innovations. And then we’re back to the old genocide issue. The Deaf community must face this prospect and arrive at a coherent approach to dealing with it. The hearing world regards deafness as a biological error to be corrected, and it has a strong argument. The Deaf regard the inability to hear as the root of a culture and language whose vanishing would be a great loss to humankind, and they are right.

Suppose that in a decade or two, despite the best efforts of the Bush administration, stem cell research leads to the ability to safely and effectively repair damaged or missing cochlear hair cells and auditory nerve cells. No “implanting” of wires and electrodes in children’s heads. No magnets stuck to their skulls. No little computers they have to wear behind their ears or on their belts. What then? The Deaf community has today been rescued from the menace of cochlear implants partly by its strengths, but mainly by the technology’s own failings.

The Deaf dodged a bullet in the cochlear implant crisis, but the bullet was a dud. How will they--and we--face the inevitable reality that one day there will be a true cure, one that will eliminate deafness, and Deafness?